SOC 2 Compliant

PCI DSS Certified

Agentic AI for Financial Services and Insurance

Layerup's Agentic OS orchestrates enterprise systems—from internal software and data infrastructure to ML models and on-prem apps—to deploy autonomous AI agents and deliver end-to-end automation across Banking, Financial Services, and Insurance.

Integrated AI Agents That Take Actions in High-Impact Workflows

Layerup's AI Agents streamline communications and enhance operational efficiency for financial institutions across mission-critical workflows.

Phone

Handles real-time voice interactions with reasoning, speech intelligence, and compliance.

Text and Email

Orchestrates multi-channel communication with intelligence, personalization, and precision.

Reason

Applies advanced reasoning to mission-critical workflows for accurate, adaptive decisions.

Take Action

Executes complex workflows end-to-end, driving outcomes seamlessly across channels.

Custom AI Agents Tailored for Financial Institutions

Layerup's forward-deployed engineers adapt AI agents to your business needs, not the other way around.

Voice AI for Collections

Streamline your collections process with intelligent, human-like AI interactions.

AI Agents for Claims Operations

Streamline claims processing with AI-powered voice interactions that guide customers through submissions, provide real-time status updates, and automate routine inquiries.

Inbound & Outbound Voice AI

Enhance customer experiences with voice AI agents handling calls, offering timely assistance for complex inquiries and updates.

Customer Service AI Agents for Financial Institutions

Offer expert assistance for complex financial products with AI solutions tailored for financial services. Improve customer experience and reduce resolution time.

Loan & Mortgage Application Support

Conversational AI can guide applicants through missing documents & next steps (e.g., "You still need to upload your W-2") via text, email, or phone.

Custom Workflow AI Agents

Get in touch to learn how our forward-deployed engineers can deploy custom AI agents on top of your knowledge ontology and SOPs.

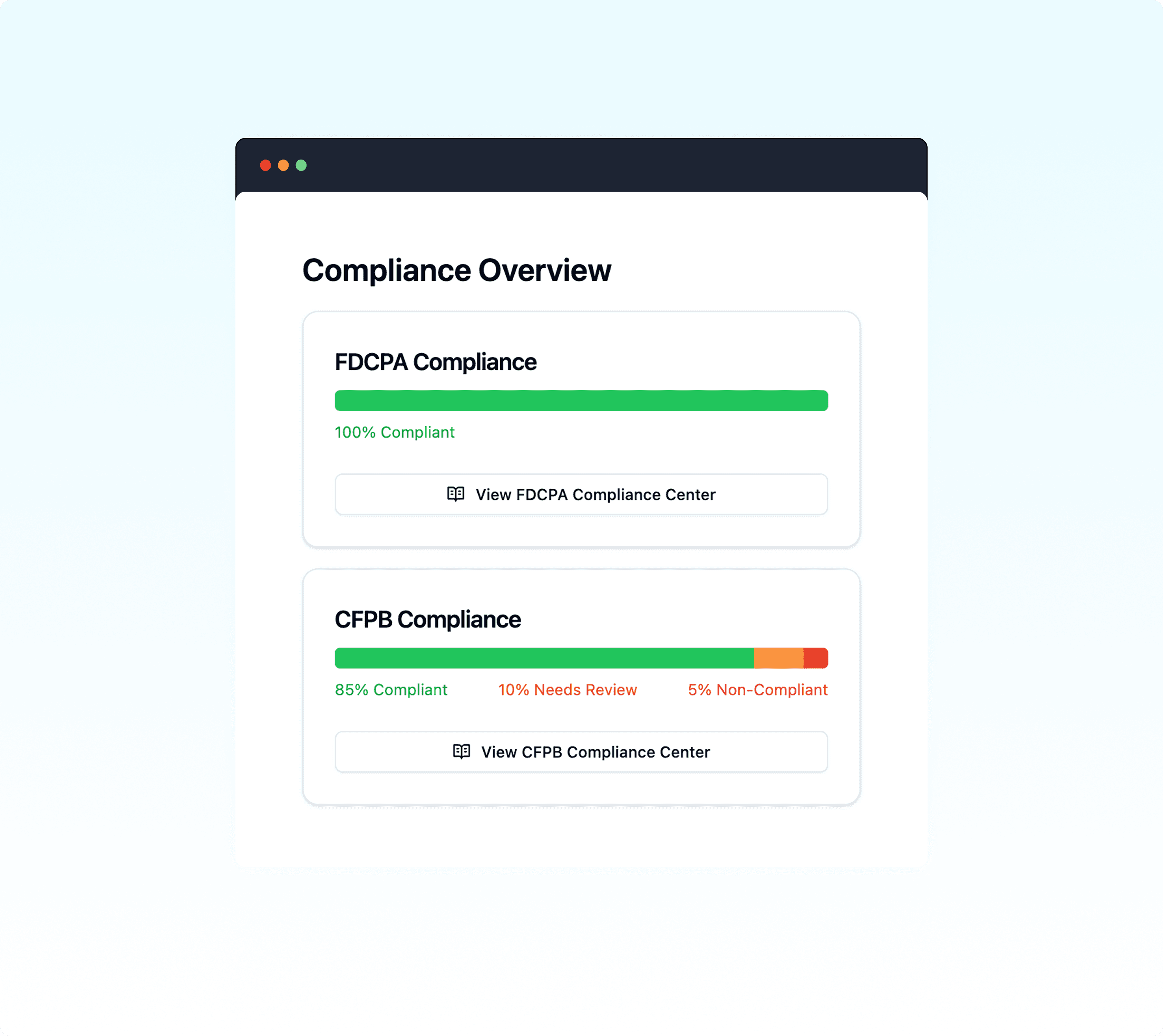

Industry-leading compliance and QA

End-to-end compliance, without compromise. Our rigorous QA framework ensures every interaction—AI or human—is consistently monitored, optimized, and aligned to maintain the highest standards of reliability, security, and regulatory alignment.

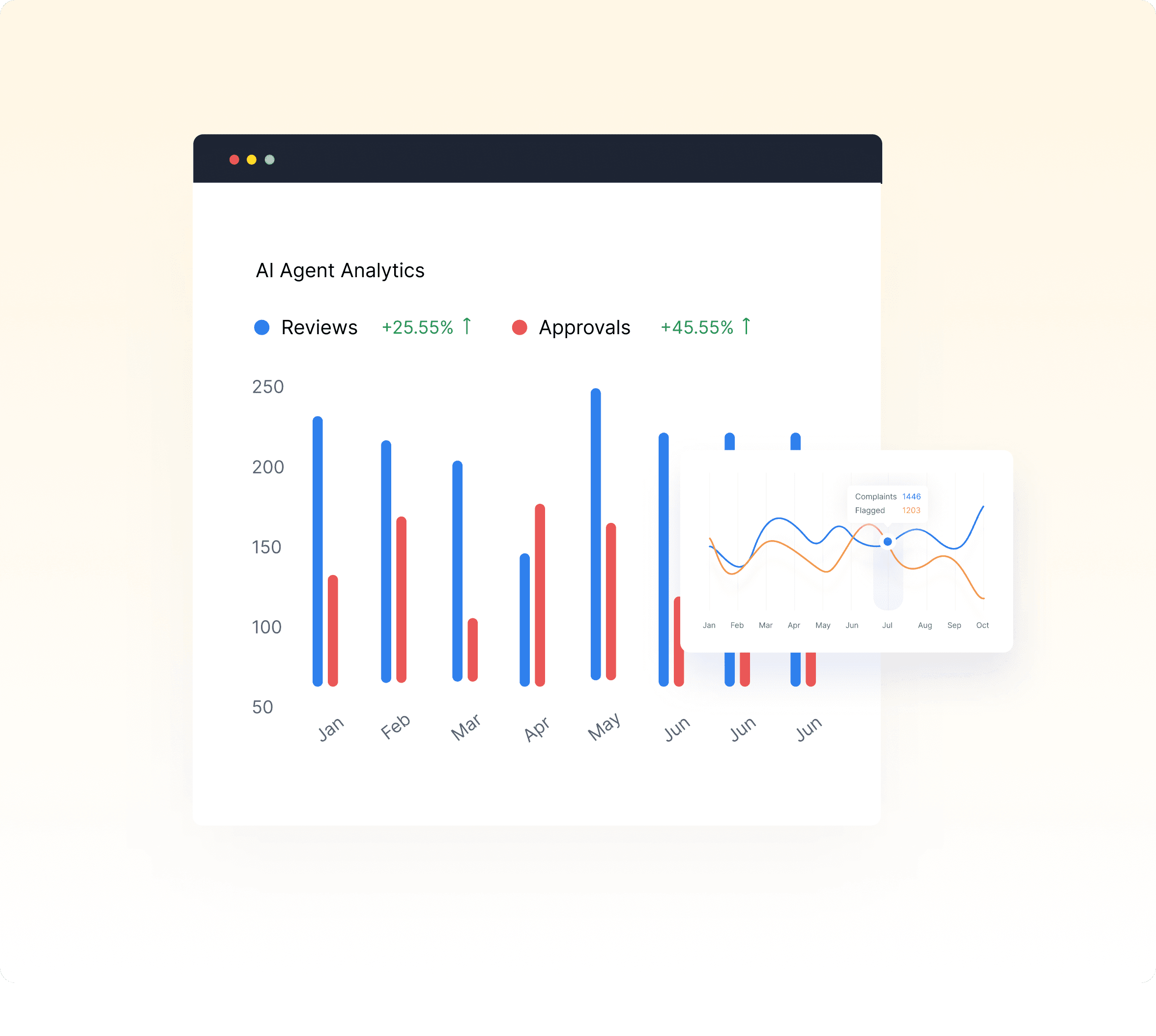

Empower decisions with analytics

Stay in the loop with extensive analytics

Each AI agent provides detailed analytics on its specific operations, while also contributing to a comprehensive overview of all compliance activities, enhancing decision-making and transparency.

Perfect visibility and control

Own your data with zero data retention. Role-based permissions and least-privilege access give you granular control, and user & agent activity reports give you insight into product usage.

Role-Based Permissions

Provide users with role-based access to essential data to ensure security.

User & Agent Activity Reports

Monitor user & agent interactions through detailed activity reports for valuable insights.

Dedicated Implementation

A dedicated implementation team, including forward-deployed engineers, implements your Standard Operating Procedures.

Adaptable to your business needs and SOPs

White-Glove, Engineer-Led Implementation

Our forward-deployed engineers handle the end-to-end deployment and integration of custom AI agents, ensuring a smooth, secure, and scalable rollout tailored to your institution's needs.

| Features | AI | BPO |
|---|---|---|
| 80+ languages | | |
| 100+ accents | | |
| Gender-based voice options | | |
| 24/7 availability | | |
| Adaptive conversational tone | | |
| Automated call scheduling and reminders | | |
| Real-time sentiment analysis | | |
| Dynamic script optimization | | |
| Multichannel support (phone, email, SMS, WhatsApp, etc.) | | |
| Unlimited scalability during peak periods | | |
| Instant onboarding and deployment | | |
| Advanced data analytics and reporting | | |
| Automated compliance checks | | |
| No overhead for training or office space | | |
| Built-in regulatory compliance | | |
| Comprehensive audit trail | | |
| Machine learning for better predictions | | |
| Integration with CRMs and ERPs | | |
| Cultural sensitivity training for AI | | |
| Ability to remember previous interactions | | |

AI Agents built with security in mind.

Security is a top priority, and we ensure that customer data is not used for model training.

We have strict measures in place to protect data privacy, adhering to industry standards and best practices to safeguard all information shared with us.

Best privacy practices, ingrained in our DNA.

Privacy is fundamental to our approach, and we are committed to safeguarding personal data.

We do not collect or store unnecessary information, and we employ robust security practices to ensure that customer data remains protected and confidential.

Your Forward-deployed AI partner.

We are at the forefront of innovation, consistently delivering cutting-edge AI solutions to ensure you stay ahead of the curve.

Layerup's team customizes Conversational AI Agents to adapt to your business needs, not the other way around.

Integrations for AI Agent Interoperability.

Layerup AI Agents access your company's integrated systems and make decisions based on them, just as a human analyst would.

Latest News & Blogs

Explore our blog for the latest insights on how you can deploy AI within your enterprise for collections operations.

How Layerup's Memory-Baked AI Agent Platform Lets Financial Institutions Scale

By embedding memory as a core architectural component, Layerup's Agentic OS enables financial institutions to deploy progressively smarter AI agents that remember, learn, and scale across collections, claims, and servicing workflows.

Why Financial Institutions Need Industry-Specific AI Agents for Mission-Critical Workflows

Banks and insurers run mission-critical workflows that demand precision, auditability, and speed at massive scale. Generic AI tools improve productivity at the edges, but industry-specific AI agents can run the institution by combining domain grounding, workflow execution, integration, and compliance.

Layerup now supports GPT-5

Layerup's Agentic OS now supports OpenAI's GPT-5, delivering advances in decision intelligence, adaptive verbosity, deep reasoning, and secure API orchestration for enterprise-grade AI agents.

Ready to learn more? Schedule a call with an AI Agent expert.

Schedule a 30-minute call to see how you can deploy AI agents for your collections operations.